Healthcare decisions happen all day. A nurse decides who needs attention first. A doctor decides which test matters. An admin team decides how to schedule beds and staff. Now add software that can spot patterns fast and suggest the next step.

That is the core idea behind AI decision making in healthcare. It is not magic. It is a set of tools that can support judgement, speed up choices, and reduce missed signals, when used with strong checks.

The National Academies report a conservative estimate: 5% of U.S. adults who seek outpatient care each year experience a diagnostic error. Many decisions rest on limited time and messy information.

A clinician still has to act.

In many hospitals, decision making relies on:

That is not a bad system. It is just human. Humans get tired. Humans miss signals when the workload is heavy. This is where AI in healthcare decision making starts to show value, if it is built and used carefully. If you want more clinical scenarios, read this guide.

AI is already showing up in regulated products, not only pilots. The FDA has stated that it has authorized more than 1,000 AI-enabled medical devices, which explains why hospitals now see these tools inside imaging, monitoring, and clinical software.

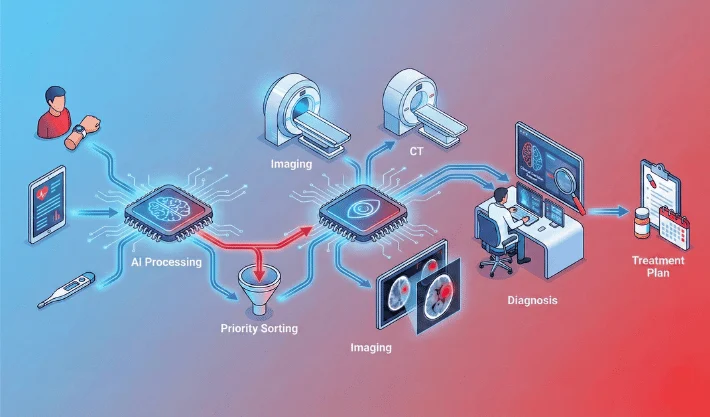

AI does not need to “replace” anyone to make a difference. It can sit inside the workflow and do small jobs that add up.

Some tools watch vital signs and lab values and flag risk early. The goal is simple: spot a patient who is getting worse before it becomes obvious.

Example: a ward team may get an alert that a patient’s sepsis risk has increased. A clinician still checks the patient and decides the action, but the alert helps prioritise attention.
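To make the early-flag idea concrete, here is a minimal sketch in Python. It assumes a simple points-based score over four vital signs and raises a flag only when the score is high and has risen since the previous reading. The `Vitals` fields, the cut-off values, and the `should_flag` rule are all hypothetical and chosen for illustration; they are not clinical guidance and do not describe any specific product.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    heart_rate: float   # beats per minute
    resp_rate: float    # breaths per minute
    temp_c: float       # degrees Celsius
    systolic_bp: float  # mmHg


def risk_points(v: Vitals) -> int:
    """Count how many vitals fall outside illustrative limits.

    The cut-offs below are hypothetical placeholders, not clinical guidance.
    """
    points = 0
    if v.heart_rate > 110:
        points += 1
    if v.resp_rate > 22:
        points += 1
    if v.temp_c > 38.5 or v.temp_c < 36.0:
        points += 1
    if v.systolic_bp < 100:
        points += 1
    return points


def should_flag(previous: Vitals, current: Vitals, min_points: int = 2) -> bool:
    """Flag when the score is at least min_points and higher than last time."""
    now = risk_points(current)
    return now >= min_points and now > risk_points(previous)


earlier = Vitals(heart_rate=88, resp_rate=16, temp_c=37.0, systolic_bp=120)
latest = Vitals(heart_rate=115, resp_rate=24, temp_c=37.2, systolic_bp=118)
flag = should_flag(earlier, latest)
```

Requiring the score to rise, not just to be high, is one way to avoid repeating the same alert on every reading. The flag only prioritises a review; the clinician still decides the action.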

The stakes are high in sepsis workflows. The CDC says 1 in 3 people who die in a hospital had sepsis during that hospitalization, so earlier flags can help teams prioritise attention sooner.

In radiology and pathology, AI can highlight suspicious areas. It can also help sort scans by urgency, so critical cases move faster.

This is useful when imaging volume is high. It can also help reduce delay during busy shifts, as long as there is a clear review step by a specialist.
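Sorting scans by urgency can be sketched as a priority queue. The example below is a minimal illustration in Python, assuming each scan arrives with a model-produced urgency score between 0 and 1. The `Worklist` class and its method names are hypothetical; a real deployment would sit inside the imaging system's own worklist.

```python
import heapq
from itertools import count


class Worklist:
    """Reading queue that returns the most urgent scan first."""

    def __init__(self) -> None:
        self._heap = []
        self._arrival = count()  # tie-breaker: earlier arrivals go first

    def add(self, scan_id: str, urgency: float) -> None:
        # heapq is a min-heap, so the urgency is negated to pop the highest first.
        heapq.heappush(self._heap, (-urgency, next(self._arrival), scan_id))

    def next_scan(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


worklist = Worklist()
worklist.add("scan-A", urgency=0.2)
worklist.add("scan-B", urgency=0.9)
worklist.add("scan-C", urgency=0.2)
reading_order = [worklist.next_scan() for _ in range(len(worklist))]
```

The queue only changes the order of reading. Every scan still reaches a specialist, which keeps the review step intact.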

Some systems suggest care pathways or flag drug interactions. Others predict who may not respond well to a treatment plan based on patterns in similar cases.

This is where AI-assisted decision making in healthcare needs extra caution. A suggestion can look confident even when the evidence is weak for a specific patient. The tool must show what it used and how strong the signal is.

A lot of care quality depends on operations. Bed availability, discharge planning, staffing, and theatre scheduling all affect patient outcomes.

Predictive tools can help estimate discharge timing, likely readmission risk, or no-show probability. That supports smoother planning and fewer last-minute scrambles. For forecasting and risk scoring examples, see AI predictive analytics in healthcare.

This kind of work is a big part of AI decision-making in healthcare, even though it is not “clinical” on the surface.

The biggest change is not that a model writes a diagnosis. The change is speed and visibility.

AI is good at finding weak signals across many variables. A clinician might not notice a small shift in multiple values across hours. A model can.

That said, pattern spotting is only useful when it leads to better action. Alerts must be relevant. Too many alerts create fatigue, and then everyone ignores them.

Good tools can reduce variation in simple decisions. They can encourage guideline-consistent actions, like recommended follow-up tests or risk checks.

This is helpful in busy settings, but it can also create “auto-pilot care” if teams stop thinking. The tool should support thinking, not switch it off.

When documentation, coding suggestions, and routine checks get lighter, clinicians can spend more time with patients. That is the ideal outcome, but it depends on how the tool is deployed and how the time savings are used.

Patients mostly feel the effects in three ways.

If risk flags are accurate and timely, deterioration can be picked up earlier. That can lead to faster treatment and fewer emergency escalations.

When imaging or lab work is triaged better, urgent cases can reach a clinician sooner. That helps reduce waiting and bottlenecks.

Some systems help produce patient-friendly summaries and next-step plans. This works best when a clinician reviews the output and adjusts wording.

AI can fail in quiet ways. That is why safety, governance, and monitoring matter.

If training data under-represents certain groups, performance can drop for those groups. That can create unequal outcomes. Teams need subgroup checks, not only overall accuracy. Use Ethics of AI in Healthcare to set fairness and accountability rules.
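A subgroup check can be as simple as computing the same metric per group and comparing. The sketch below, in Python, calculates sensitivity (the share of true cases the tool caught) for each group. The record layout `(group, y_true, y_pred)` and the function names are assumptions made for this example, and the sample data is invented purely to show the calculation.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity} from (group, y_true, y_pred) tuples.

    Labels are 0 or 1. Groups with no positive cases are left out,
    because sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = sorted(set(true_pos) | set(false_neg))
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def largest_gap(per_group):
    """Difference between the best and worst performing group."""
    values = list(per_group.values())
    return max(values) - min(values)


# Invented sample data, for illustration only.
sample = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
per_group = sensitivity_by_group(sample)
gap = largest_gap(per_group)
```

In this invented sample the overall sensitivity is 50%, which hides the fact that group A sits at about 67% and group B at about 33%. That gap is what an overall accuracy figure would miss.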

Hospitals change. Protocols change. Population patterns change. When inputs shift, model performance can degrade. Monitoring is not optional.
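One common way to watch for input shift is the population stability index (PSI), which compares how a feature was distributed at validation time with how it is distributed now. The Python sketch below is a minimal version under stated assumptions: the bin edges are chosen by the team, empty bins are floored at a small value to avoid dividing by zero, and the sample numbers are invented. The function names are hypothetical, and the PSI level that triggers a review is a local decision.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges, floor=1e-6):
    """Share of values in each bin defined by sorted edges.

    Empty bins are floored so the logarithm in psi() stays defined.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, floor) for c in counts]


def psi(expected, actual, edges):
    """Population stability index between a baseline and a recent sample."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Invented values for one input feature, for illustration only.
baseline = [1, 2, 3, 4, 5, 6, 7, 8]
recent_same = [1, 2, 3, 4, 5, 6, 7, 8]
recent_shifted = [5, 6, 7, 8, 7, 8, 8, 7]
edges = [2.5, 4.5, 6.5]

psi_same = psi(baseline, recent_same, edges)
psi_shifted = psi(baseline, recent_shifted, edges)
```

A PSI of zero means the two distributions match across the bins; larger values mean a bigger shift. Running this per input feature on a schedule gives teams an early signal before accuracy visibly drops.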

A confident-looking output can push a team toward the wrong action. This is one reason AI-assisted decision-making in healthcare needs clear uncertainty signals, clear escalation rules, and strong clinician oversight.

Health data is sensitive. Tool access must be role-based. Logs need protection. Integrations should follow local compliance needs and internal policy.

Teams often ask how to audit AI decision-making in healthcare without turning it into a paperwork exercise. The answer is to audit the full loop, not only the model. Do the following:

1) Validate the clinical goal

2) Check data quality and representativeness

3) Run bias and subgroup checks

4) Test in a controlled pilot

5) Add monitoring and drift alerts

6) Confirm governance and accountability

Healthcare tools will keep moving closer to day-to-day workflows. More decisions will have a “second opinion” layer that runs quietly in the background. If your team is exploring AI decision making in healthcare, WebOsmotic can help you plan the right use case, build the workflow, and set guardrails so the tool supports care without creating new risk.

In most real deployments, AI suggests and flags. A clinician still owns the final call. The safest setups keep clear review steps and make overrides easy, so human judgement stays in control.

High-volume areas with clear signals often see early wins, like imaging triage and deterioration risk flags. Operational planning can also improve quickly because outcomes are easier to measure and test.

Overtrust is a common risk. A confident output can push teams toward the wrong action. Clear uncertainty cues, strong testing, and routine monitoring reduce that risk significantly.

Use drift monitoring. Track alert rates, override rates, and outcome alignment. When patterns shift, run a review and revalidation. Treat monitoring as ongoing clinical quality work.
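As a rough sketch of that tracking, the Python example below computes a weekly override rate from an alert log and lists the weeks that moved away from a baseline. The `AlertEvent` structure, the week labels, the baseline, and the tolerance are all hypothetical values chosen for illustration; real thresholds should come from the team's own pilot data.

```python
from dataclasses import dataclass


@dataclass
class AlertEvent:
    week: str         # e.g. an ISO week label
    overridden: bool  # True if the clinician dismissed the alert


def override_rate_by_week(events):
    """Return {week: share of alerts that clinicians overrode}."""
    totals = {}
    overrides = {}
    for e in events:
        totals[e.week] = totals.get(e.week, 0) + 1
        overrides[e.week] = overrides.get(e.week, 0) + int(e.overridden)
    return {week: overrides[week] / totals[week] for week in totals}


def weeks_needing_review(rates, baseline, tolerance=0.15):
    """Weeks where the override rate moved more than tolerance from baseline."""
    return sorted(w for w, r in rates.items() if abs(r - baseline) > tolerance)


# Invented log entries, for illustration only.
log = (
    [AlertEvent("2024-W01", o) for o in (True, False, False, False)]
    + [AlertEvent("2024-W02", o) for o in (True, True, True, False)]
)
rates = override_rate_by_week(log)
to_review = weeks_needing_review(rates, baseline=0.25)
```

A rising override rate can mean the alerts have become less relevant, and a falling one can mean overtrust, so the check looks at movement in both directions.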

Cover ownership, pilot evidence, subgroup checks, monitoring rules, incident process, and version tracking. Keep it practical and tied to real workflow steps, so teams can follow it during busy weeks.