Coding is changing fast. Not because programmers are disappearing, but because more of the repeatable work around coding can now run on autopilot. The big shift is that software can plan steps, run tools, and check its own output instead of only producing a one-off answer.

In the U.S., software work is still expanding. The Bureau of Labor Statistics projects 15% growth during 2024–2034 for software developers, QA analysts, and testers, with about 129,200 openings each year. The jobs are not going away; the bigger shift is in how the work gets done.

In this guide, you will see how agentic AI fits into real development work, what skills still matter most, and what to watch out for when you let systems act on your behalf.

An agentic system can take a goal, break it into tasks, pick tools, take actions, and keep going until it hits a stop rule. It is planning plus execution plus feedback loops, with guardrails.

So, what is agentic AI in plain words? It is a setup where a model does not just answer. It decides the next step, does it, checks the result, then repeats. For broader context before you go deep on agents, this overview of generative AI covers its use cases and limits.
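To make that loop concrete, here is a minimal sketch in Python. It is not any particular framework's API: `run_agent`, `decide`, `act`, and `check` are hypothetical names. In a real setup `decide` would wrap a model call and `act` would call a tool. The point is the shape: decide, do, check, repeat, with a hard cap as the stop rule.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    action: str  # what the agent decided to do
    ok: bool     # whether the check after the action passed


def run_agent(
    goal: str,
    decide: Callable[[str, list], str],  # hypothetical: would wrap a model call
    act: Callable[[str], None],          # hypothetical: would call a tool
    check: Callable[[], bool],           # e.g. "do the tests pass?"
    max_steps: int = 5,                  # stop rule: hard cap on iterations
) -> list:
    """Decide the next step, do it, check the result, and repeat until done or capped."""
    history = []
    for _ in range(max_steps):
        action = decide(goal, history)  # plan from the goal plus what happened so far
        act(action)                     # execute the chosen step
        ok = check()                    # verify the result
        history.append(Step(action, ok))
        if ok:                          # goal reached, stop early
            break
    return history


# Toy usage: the "goal" is to push a counter to 3.
state = {"count": 0}
history = run_agent(
    "reach 3",
    decide=lambda goal, hist: "increment",
    act=lambda action: state.update(count=state["count"] + 1),
    check=lambda: state["count"] >= 3,
)
print(len(history), history[-1].ok)  # 3 True
```

If `check` never passes, the loop still ends after `max_steps` iterations, which is the simplest possible stop rule.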

A simple agentic AI definition that works for day-to-day software work is: “A system that can act toward a goal using tools and iteration, with guardrails.”

A model is the brain. An agent is the working setup around that brain.

So, what is an AI agent when you talk about coding? It is a tool-using loop that can read context, plan steps, call APIs, run tests, and write changes inside a controlled workspace.
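Here is a rough sketch of the "controlled workspace" part, assuming a hypothetical `Workspace` class: the agent can only call tools on an allowlist and can only write files under one root directory. Real agent frameworks and sandboxes enforce this in their own ways; this only illustrates the idea, and it needs Python 3.9+ for `Path.is_relative_to`.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict


class Workspace:
    """Hypothetical sandbox: allowlisted tools only, and writes only under `root`."""

    def __init__(self, root: Path, tools: Dict[str, Callable[..., str]]):
        self.root = root.resolve()
        self.tools = tools  # the allowlist: tool name -> function

    def call(self, name: str, *args: str) -> str:
        if name not in self.tools:
            raise PermissionError(f"tool not allowed: {name}")
        return self.tools[name](*args)

    def write(self, rel_path: str, text: str) -> Path:
        target = (self.root / rel_path).resolve()
        # Refuse anything that escapes the workspace, such as "../secrets.txt".
        if not target.is_relative_to(self.root):
            raise PermissionError(f"path outside workspace: {rel_path}")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(text)
        return target


with tempfile.TemporaryDirectory() as tmp:
    ws = Workspace(Path(tmp), tools={"echo": lambda s: s})
    print(ws.call("echo", "hi"))             # hi
    ws.write("src/app.py", "print('ok')\n")  # allowed: inside the workspace
    for attempt in (lambda: ws.call("rm", "-rf"), lambda: ws.write("../x.txt", "no")):
        try:
            attempt()
        except PermissionError as err:
            print("blocked:", err)
```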

Think of an agent like a junior developer who types extremely fast but has no real-world judgment unless you add rules. It can be helpful, but it needs boundaries. If you want a hands-on build flow plus guardrails, see how to build an AI agent with ChatGPT.

Traditional coding flow is linear. You think, you code, you run, you fix. Agentic systems add a second worker that can keep moving while you focus on high value decisions.

Teams are already using agents for:

The best part is not “writing code for you.” It is shrinking the dead time between intent and a verified change.

This is already normal in many teams. Stack Overflow’s 2025 survey says 84% of respondents use or plan to use AI tools in development, and 50.6% of professional developers use them daily, so the workflow shift is already happening.

People mix these ideas up, so it helps to separate them. Here is the practical meaning behind agentic AI vs generative AI. For a clean starter primer your team can share, how to use generative AI covers the basics in plain steps.

Generative systems are great at producing content in one shot, like a function or a quick snippet.

Agentic systems are built for multi-step work: plan, act, validate, and adjust.

If you ask for a login flow, a generative system might output a sample implementation. An agentic setup can also create the route, add tests, run them, fix failures, and open a pull request, as long as permissions and checks exist.

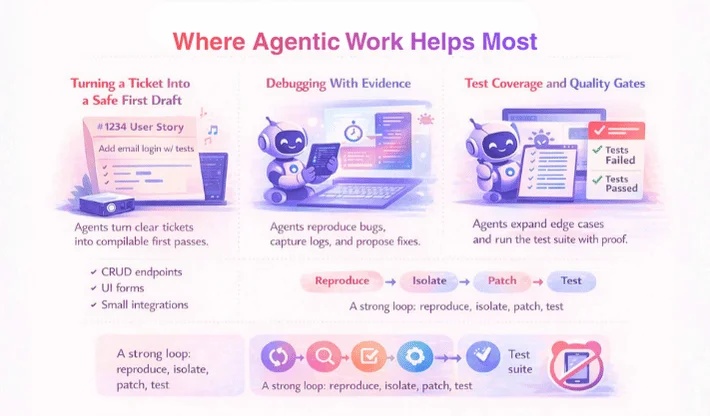

The easiest wins are tasks that are repeatable and easy to verify.

Give an agent a clear ticket plus codebase context and a few rules. It can produce a first pass that compiles and passes basic tests. You still review it, but you start with something concrete.

This works well for:

The trick is specificity. “Add login” is vague. “Add email login using our existing auth service, add tests, and keep the UI style” gives the agent a real target.
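One way to force that specificity is to make the ticket a structure rather than a sentence. The `TaskSpec` class and its word-count rule below are illustrative assumptions, a crude heuristic rather than a real vagueness detector; the useful part is refusing any task that has no verifiable checks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskSpec:
    goal: str
    constraints: List[str] = field(default_factory=list)  # rules the change must respect
    checks: List[str] = field(default_factory=list)       # commands that prove success

    def problems(self) -> List[str]:
        """Return reasons this spec is too vague to hand to an agent (crude heuristic)."""
        issues = []
        if len(self.goal.split()) < 4:
            issues.append("goal is too short to be specific")
        if not self.checks:
            issues.append("no verifiable checks")
        return issues


vague = TaskSpec(goal="Add login")
specific = TaskSpec(
    goal="Add email login using our existing auth service",
    constraints=["reuse the existing auth service", "keep the current UI style"],
    checks=["pytest tests/test_login.py"],  # hypothetical test path
)
print(vague.problems())     # ['goal is too short to be specific', 'no verifiable checks']
print(specific.problems())  # []
```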

Good agents do not just guess. They run the code, capture logs, reproduce the bug, and then propose a fix. This saves time because the steps are visible and repeatable.

A strong loop is: reproduce, isolate, patch, test. The agent can do the repetitive parts while you sanity check edge cases.
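Here is that loop on a toy bug, as a sketch. `parse_price` and its thousands-separator bug are made up for illustration. In an agentic setup the agent would propose the patch, while a harness like this keeps the reproduction and the test run visible and repeatable.

```python
def parse_price(text: str) -> float:
    """Buggy version: crashes on thousands separators like '1,200'."""
    return float(text)


def parse_price_patched(text: str) -> float:
    """Candidate patch: strip thousands separators before converting."""
    return float(text.replace(",", ""))


def reproduce(fn, cases):
    """Run every case; collect inputs that crash or return the wrong value."""
    failures = []
    for raw, expected in cases:
        try:
            if fn(raw) != expected:
                failures.append(raw)
        except Exception:  # a crash counts as a reproduction too
            failures.append(raw)
    return failures


def isolate(failures):
    """Treat the shortest failing input as the minimal reproduction."""
    return min(failures, key=len)


cases = [("12", 12.0), ("3.5", 3.5), ("1,200", 1200.0), ("10,000.25", 10000.25)]

failures = reproduce(parse_price, cases)           # 1. reproduce
minimal = isolate(failures)                        # 2. isolate
remaining = reproduce(parse_price_patched, cases)  # 3-4. patch, then test all cases
print(failures, minimal, remaining)  # ['1,200', '10,000.25'] 1,200 []
```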

Agents can generate test skeletons, expand edge cases, and run the suite. Insist on proof. Tests should fail before the fix and pass after the fix.
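The "fail before, pass after" rule can be written down as a check. This is a minimal sketch with hypothetical helpers (`runs_clean`, `proves_fix`, and the slug functions). A test that passes on both the old and new code is flagged, because it proves nothing about the fix.

```python
from typing import Callable


def runs_clean(test: Callable[[Callable], None], impl: Callable) -> bool:
    """True if the test runs against `impl` without raising."""
    try:
        test(impl)
        return True
    except Exception:  # AssertionError or a crash both count as failure
        return False


def proves_fix(test, before, after) -> bool:
    """A test proves a fix only if it fails on the old code and passes on the new code."""
    return not runs_clean(test, before) and runs_clean(test, after)


def old_slugify(title: str) -> str:
    return title.lower()  # bug: spaces are kept


def new_slugify(title: str) -> str:
    return "-".join(title.lower().split())  # fix: join words with hyphens


def check_slug(slugify) -> None:
    assert slugify("Hello World") == "hello-world"


def check_returns_str(slugify) -> None:
    assert isinstance(slugify("x"), str)  # passes on both versions, so it proves nothing


print(proves_fix(check_slug, old_slugify, new_slugify))         # True
print(proves_fix(check_returns_str, old_slugify, new_slugify))  # False
```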

If your checks are weak, agentic work becomes risky. Strengthen tests and linters first, then scale up.

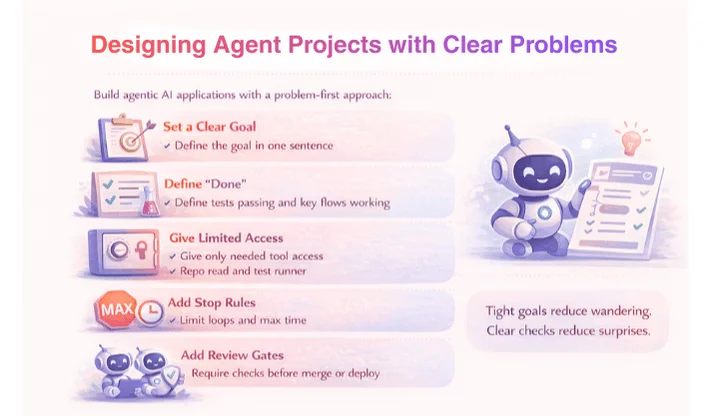

If you try to “add agents” as a feature, things get messy. Start with a job and define how success will be verified.

Here is a practical way to think about building agentic AI applications with a problem-first approach:

Tight goals reduce wandering. Clear checks reduce surprises.

A St. Louis Fed analysis using a U.S. survey reports almost 40% of adults aged 18–64 used generative AI in August 2024, and 28.1% reported using it at work.

Agentic work does not remove engineering skill. It shifts where it shows up.

This is not a “set it and forget it” shift. Gartner predicts over 40% of agentic AI projects will be canceled by the end of 2027, which is a good reason to start small, keep tool access tight, and demand proof via tests.

Because agents can take actions, failures can scale fast.

You do not need a complex stack. You need a controlled loop with visibility.

A good baseline is:

Start with low-risk tasks. Let the agent create a pull request, not commit directly to main. Keep a human in the loop until checks are trusted.
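Those rules can be stated as a small policy function. `ChangeRequest` and `may_merge` are hypothetical; in practice you would enforce the same rules with your Git host's branch protection settings rather than a script, but writing them out makes the guardrails explicit.

```python
from dataclasses import dataclass
from typing import Tuple

PROTECTED_BRANCHES = {"main"}


@dataclass
class ChangeRequest:
    target_branch: str
    via_pull_request: bool  # False means a direct push
    checks_passed: bool     # tests, lint, etc.
    human_approved: bool    # a reviewer signed off on the diff


def may_merge(cr: ChangeRequest) -> Tuple[bool, str]:
    """Hypothetical policy: PR-only into protected branches, green checks, human approval."""
    if cr.target_branch in PROTECTED_BRANCHES and not cr.via_pull_request:
        return False, "direct commits to protected branches are blocked"
    if not cr.checks_passed:
        return False, "checks must pass first"
    if not cr.human_approved:
        return False, "needs human review"
    return True, "ok"


print(may_merge(ChangeRequest("main", via_pull_request=False, checks_passed=True, human_approved=True)))
# (False, 'direct commits to protected branches are blocked')
print(may_merge(ChangeRequest("main", via_pull_request=True, checks_passed=True, human_approved=False)))
# (False, 'needs human review')
```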

Developers will still write code, but more time will go into orchestration and quality control. You will define intent, spot edge cases, and make trade-offs. If an agent can generate ten options in minutes, the value is in picking the right one and proving it is safe. If you are planning budget and scope, custom AI development cost gives practical ranges and cost drivers.

And yes, agentic AI will become normal in IDEs and CI pipelines, because it matches how software is already built: tools, tests, and reviews.

If you want to ship this kind of workflow safely, WebOsmotic can help you design guardrails, pick a practical tool stack, and integrate agents into delivery without risking quality.

Not in any reliable way. They can handle chunks of work, but they still need human intent, review, and accountability, especially around security and product decisions.

Pick a task with clear checks, like adding tests or creating a small endpoint. If you can verify success with tests and lint, you can run the loop safely.

They treat tests and reviews as the truth. Agents should run tests after edits, and humans should review diffs with a checklist tied to product and security rules.
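A minimal sketch of "run the checks after every edit", assuming each check is a command that exits with status 0 on success. In a real project the commands might be your test runner and linter, which are assumptions about your toolchain; the example uses tiny Python one-liners so it runs anywhere.

```python
import subprocess
import sys
from typing import Dict, List


def run_checks(commands: List[List[str]], timeout: float = 300.0) -> Dict[str, bool]:
    """Run each check command; a check passes when it exits with status 0."""
    results = {}
    for cmd in commands:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
        results[" ".join(cmd)] = proc.returncode == 0
    return results


# Stand-ins for real checks such as a test runner or linter.
results = run_checks([
    [sys.executable, "-c", "print('tests ok')"],
    [sys.executable, "-c", "raise SystemExit(1)"],  # simulates a failing lint step
])
print(all(results.values()))  # False: at least one check failed, so the edit is not done
```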

Add stop rules: max iterations, max time, and max cost. Also add “ask for help” triggers when the agent sees repeated failures.
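Those stop rules fit in a small object the loop consults after every iteration. This is a sketch with assumed names and limits (`StopRules`, a cost unit of your choosing). Counting consecutive identical errors is one simple way to implement an "ask for help" trigger, not the only one.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StopRules:
    max_iterations: int = 10
    max_seconds: float = 600.0
    max_cost: float = 5.0           # in whatever unit you track, e.g. dollars (assumed)
    max_repeated_failures: int = 3  # same error this many times in a row -> ask a human

    started: float = field(default_factory=time.monotonic)
    iterations: int = 0
    cost: float = 0.0
    last_error: Optional[str] = None
    repeats: int = 0

    def record(self, cost: float, error: Optional[str]) -> str:
        """Log one iteration; return 'continue', 'stop', or 'ask_for_help'."""
        self.iterations += 1
        self.cost += cost
        if error is not None and error == self.last_error:
            self.repeats += 1  # the same failure again
        else:
            self.repeats = 1 if error else 0
        self.last_error = error
        if self.repeats >= self.max_repeated_failures:
            return "ask_for_help"
        if (
            self.iterations >= self.max_iterations
            or time.monotonic() - self.started >= self.max_seconds
            or self.cost >= self.max_cost
        ):
            return "stop"
        return "continue"


rules = StopRules(max_cost=1.0)
for err in ["ImportError: foo", "ImportError: foo", "ImportError: foo"]:
    decision = rules.record(cost=0.1, error=err)
print(decision)  # ask_for_help
```

In the agent loop, call `record` after each attempt and break out on anything other than `"continue"`.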

Get strong at writing crisp specs, reviewing code quickly, and designing safe tooling. Those skills transfer across any agent framework.